Industry Trends & Research

02.09.2023

Can I use ChatGPT for my technical interview?

Jason Wodicka

ChatGPT’s original demos included some tantalizing examples of the model producing Python code from a simple natural language prompt. Since those initial examples, we’ve received many questions about what this means for technical interviews like the ones we conduct. Most commonly, we hear candidates ask, “Can I use ChatGPT in my interview?” But I think the better question is “Should I use ChatGPT in my interview?”

Karat’s R&D team has been monitoring the research and market trends on generative AI for a while, and we’ve also been carrying out some of our own experiments, directly prompting ChatGPT with questions we’ve used for interviews in the past. In the spirit of research and transparency, we’ve published the full annotated results of those interviews here. Based on our findings, we’ve updated our FAQ to clarify what’s OK. The updated FAQ is available at the bottom of the page, but in this article, we’d like to talk about the thought process behind the decisions, and why candidates should be especially cautious about relying on external code generators.

This isn’t an unprecedented situation

The arguments being made about ChatGPT aren’t new. In the early 2000s, people predicted that Google was going to fundamentally change how people search for information, and render knowledge irrelevant. Almost ten years later, people were lamenting the rise of Stack Overflow as a quick-and-easy knowledge base for programming questions, and worrying that it would ruin technical interviewing.

People were half-right in both of these cases: both tools have changed the way that day-to-day programming work is done. Stacks of reference books have disappeared from developers’ desks, and being able to frame a good search has become an important job skill in many knowledge fields, including software development. But the doomsday forecasts never came to pass, and with hindsight it’s easy to see why. Problem-solving is a core skill of software development. Reading language references and hoarding O’Reilly books is not. Google and Stack Overflow changed where and how developers learn from each other, but the fundamental work didn’t change.

We see ChatGPT as another iteration of the same pattern. Will it disrupt the day-to-day software development process? Based on what we’ve seen, it seems plausible that it will. Will it invalidate our current methods of interviewing? That’s less plausible, at least when the interviews are well-designed. And one of the ways we ensure that interviews are well-designed is to structure them so that interview participants have access to the customary tools of the craft.

So, can I use ChatGPT in my technical interview or not?

For a long time, we’ve allowed candidates to use Google and Stack Overflow during interviews, and now we’re adding ChatGPT – and any other similar technologies – to that list. The important part is not whether you can use those technologies, but what you can use them for. We tell candidates they’re allowed to use reference materials to answer syntax questions, look up language details, and interpret the often-cryptic error output from compilers. As working developers, we’ve all done those things; so why should we have to struggle without basic resources during an interview? But we ask that they not look for a full solution to the problem, or copy and paste code from elsewhere directly into their solution.

Our problems are crafted to be solvable in the allotted time, and we expect candidates to be able to explain what the code they’re writing does, and why they’ve written it. Referring to other code as a refresher for language details or structure doesn’t invalidate their understanding of the problem they’re solving.

But is it a good idea?

One question we haven’t been asked, in this flurry of inquiries about whether ChatGPT is okay to use in an interview, is whether it’s useful! Based on my own experiments with ChatGPT as a candidate, I’m not confident that it’s generally helpful, except in certain situations. There are a few notable issues to be aware of before using ChatGPT in an interview:

It has no notion of whether it’s correct. If you ask it how to reverse a string in Ruby, it might provide a correct answer. But it might also grab the method that you’d use in JavaScript and seamlessly adjust the syntax around it to look more Ruby-like. In my experience using GPT-like tools, I’ve seen both of these scenarios happen. When you’re trying to answer a question, do you really want a guess from ChatGPT, when you can probably find more definitive documentation by using a search engine?

It’s not especially fast. Writing out your question in a way that ChatGPT can understand isn’t instant, and in my experiments, the turnaround time for responses was usually longer than a web search. I also found that I frequently needed to refine my question with one or two exchanges, which added more time. If your question isn’t answerable by a conventional web search, that delay is probably fine, but we’ve already established that there are other tools for developers that are faster and more accurate.
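To make the string-reversal example above concrete, here is a minimal sketch of why an answer borrowed from one language can mislead in another. Ruby strings have a built-in reverse method, while JavaScript strings do not, so the usual JavaScript idiom goes through an array first. The TypeScript below shows that idiom. The function name reverseString is ours, chosen for illustration, and is not from any library.

```typescript
// Illustrative helper (hypothetical name): reverse a string the usual
// JavaScript/TypeScript way. Strings have no reverse method of their own,
// so we split into an array, reverse the array, and join it back together.
function reverseString(input: string): string {
  // split("") divides by UTF-16 code unit. That is fine for ASCII input,
  // but it would break characters outside the Basic Multilingual Plane.
  return input.split("").reverse().join("");
}

console.log(reverseString("interview")); // prints "weivretni"
```

A plausible-looking answer that blends the two languages can be hard to spot at a glance, which is why checking the language’s own documentation is the safer move.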

I found one task it handled reasonably well, though, and there may be others:

It provided good human-language summaries of error messages. In general, whenever I asked it what an error message meant, it was able to distill the most important information into an easier-to-read format than what the compiler had printed. I can see it being useful, especially for uncommon or unfamiliar errors, in ways that just searching for the error phrase may not be.

Overall, I see minimal odds of ChatGPT helping someone who doesn’t have the problem-solving skills that the role requires. As I’ve shared in previous writeups, ChatGPT’s inability to create or maintain a mental model of the problem it’s solving will likely do more harm than good if a candidate relies too heavily on its coding capabilities.

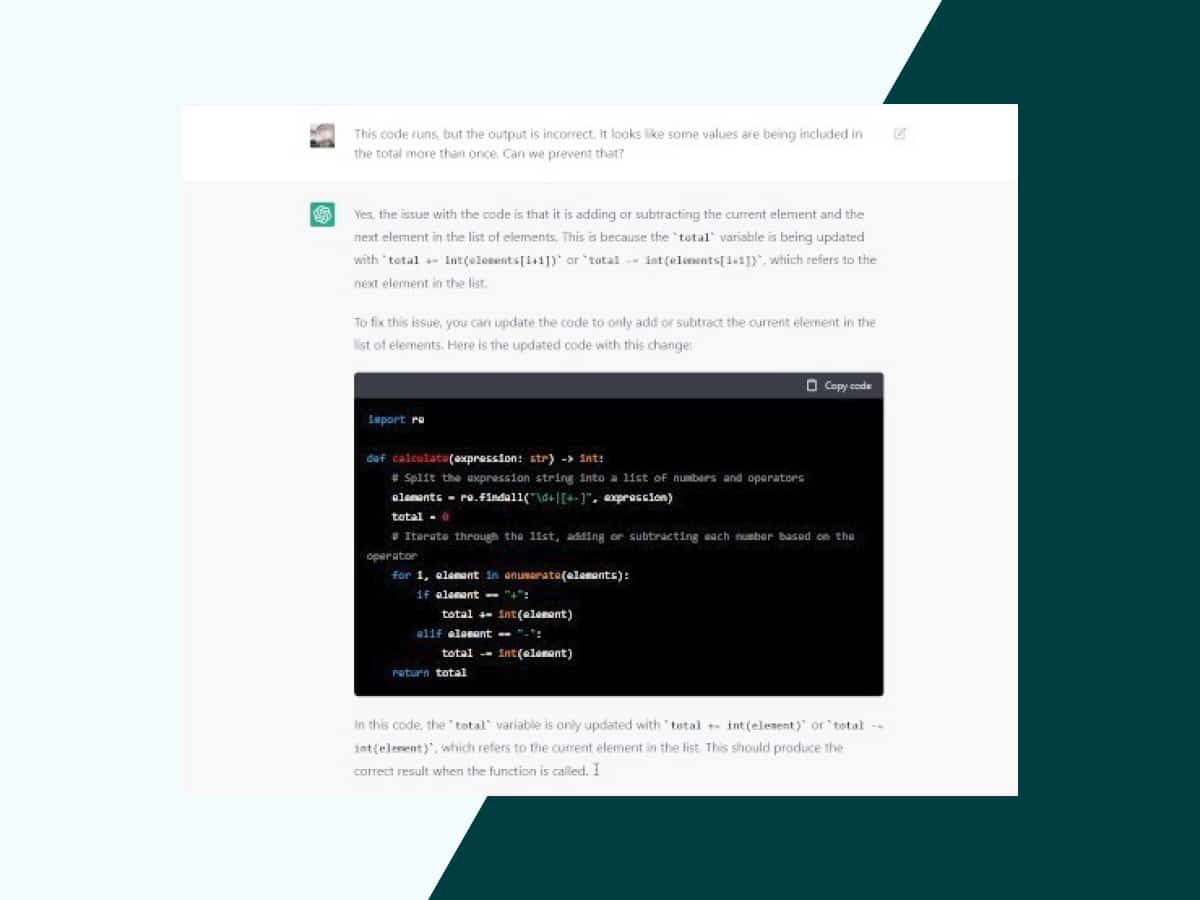

During our interviews, ChatGPT frequently produced replies that were inconsistent – saying one thing while doing another, or claiming to fix an error while leaving the code entirely unchanged. It has no sense that saying “the sky is orange” is false; only that it’s much less likely to be written than “the sky is blue.”

If anyone wants to dig deeper, I’d recommend reading the full interview transcripts. And for quick reference, here is the latest FAQ from the Karat team.

ChatGPT interviewing FAQ for candidates

Am I allowed to use Google, Stack Overflow, etc. during the interview?

During the coding portion of the interview, you are allowed to look up documentation just as you would on the job. Let your Interview Engineer know when you are looking something up.

Can I use ChatGPT or other generative AI tools during a Karat interview?

We think of ChatGPT the same way we think of Google or Stack Overflow. If you want to look up documentation during the coding portion of an interview, just let your Interview Engineer know, and feel free to use ChatGPT. For now, we do not allow the use of ChatGPT to generate an approach or a solution. If it becomes normalized for developers to use AI generation during their day-to-day work, we’ll definitely update our stance. At this stage, most companies expect developers to perform coding tasks without the use of AI.

ChatGPT interviewing FAQ for clients

Can candidates use tools like ChatGPT in a Karat interview?

Karat assessments are designed to test a developer’s real-world technical abilities. Our interviews test not just the ability to write code, but also the ability to explain an approach to the problem, consider tradeoffs, and take feedback. Because we are testing a candidate’s real-world abilities, candidates are allowed to use tools like Google and Stack Overflow to look up documentation during the interview, just as they would on the job. We think about ChatGPT in the same way. If a candidate wants to know the syntax for rounding a number in JavaScript, they could ask ChatGPT alongside their Interview Engineer in the same way they could ask Google.

As ChatGPT and AI generation develop, Karat will align with the industry on how they are used in assessments. For now, we do not allow candidates to use ChatGPT to generate an approach or a solution, and ChatGPT cannot be used for debugging, just as external IDEs cannot be used. If it becomes normalized for developers to use AI generation during their day-to-day work, we’ll update our stance, but for the roles candidates are interviewing for right now, we expect them to be able to perform the task without the use of AI.

Related Content

Global Hiring

11.26.2025

The growth of global capability centers (GCCs) in India has exploded over the past few years. Hiring targets for 2024 were more than double that of the U.S., with an average of 790 open roles in India. This represents a 19% year-over-year increase. Real estate is also becoming impossible to find in GCC hubs such […]

AI Hiring

11.21.2025

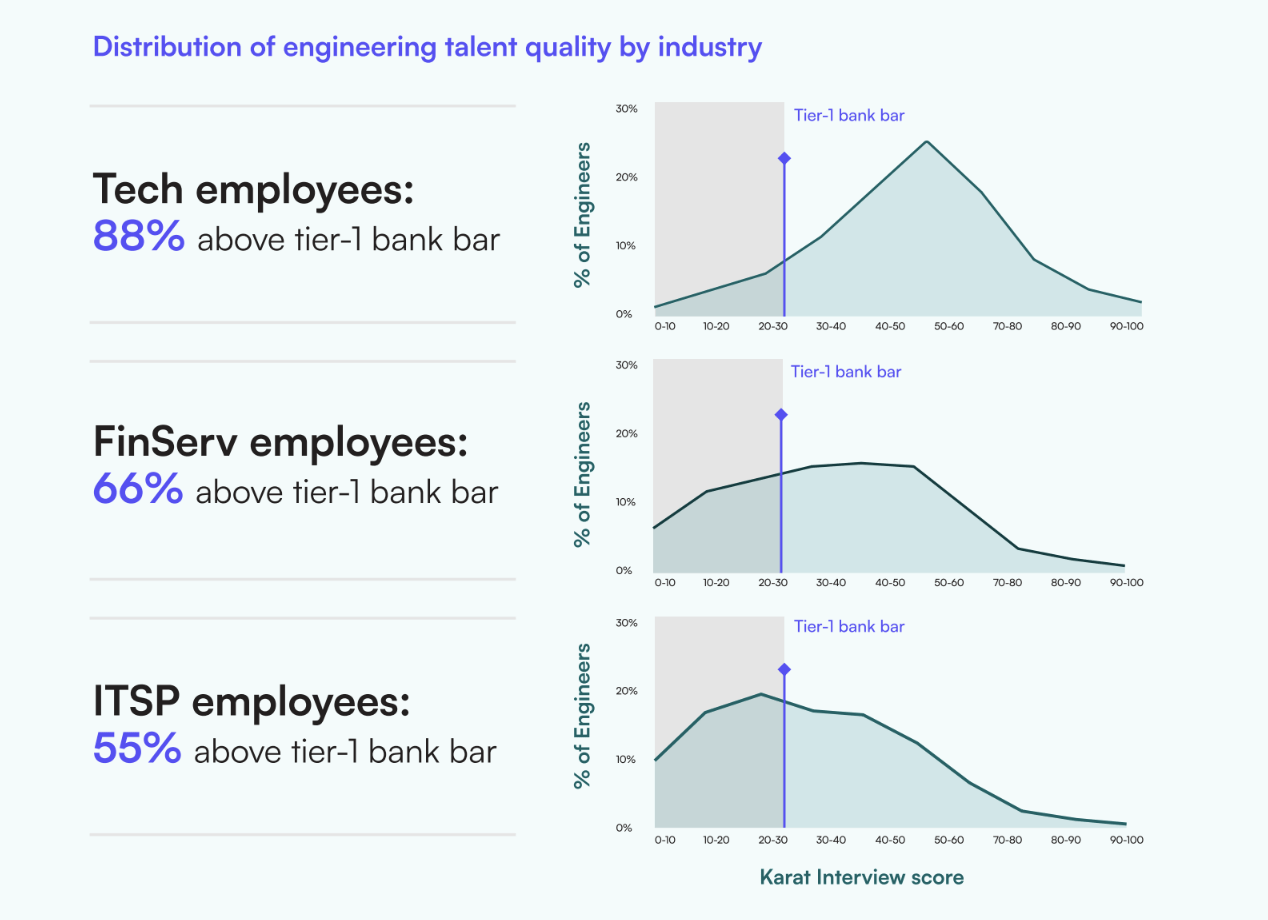

AI is transforming the financial services (FinServ) industry, from trading to digital banking and fraud detection. As demand for digital innovation increases, FinServ organizations are increasingly relying on contractors and IT Service Providers (ITSPs) to deliver rapid progress while minimizing risk. Their expectations are rising at the same time, as engineering teams need to meet […]

Industry Trends & Research

03.19.2025

According to technical interview performance, remote roles are drawing higher concentrations of elite software engineers than any city in the US.